A very interesting report landed in front of me today: Information Week’s IT Pro ranking of data deduplication vendors, made available just a few weeks ago. It gives an overview of the dedupe market so far.

It surveyed over 400 IT professionals from various industries, with companies ranging from fewer than 50 employees to over 10,000 employees and revenues from less than USD5 million to USD1 billion. Overall, it had a good mix of respondents, and the results were quite interesting.

It surveyed 2 segments:

- Overall performance – product reliability, product performance, acquisition costs, operations costs etc.

- Technical features – replication, VTL, encryption, iSCSI and FCoE support etc.

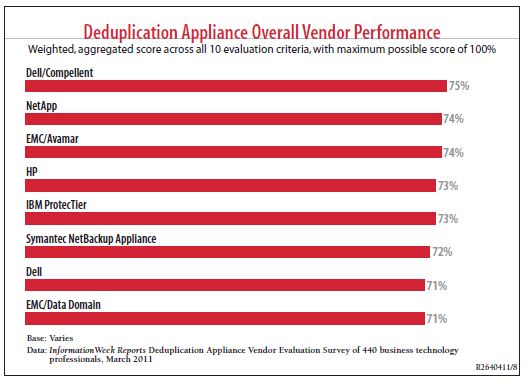

When I saw the results (shown below), surprise, surprise! Here’s the overall performance survey chart:

Dell/Compellent scored the highest in this survey while EMC/Data Domain ranked the lowest. However, the difference between the first-place and the last-place vendor is only 4%, which suggests that EMC/Data Domain is just about as good as the Dell/Compellent solution, but it scored poorly in the areas that matter most to the customer. In fact, as we drill down into the requirements of overall performance one by one (shown below), there is little difference among the 7 vendors.

However, when it comes to Technical Features, Dell/Compellent is ranked last, the complete opposite. As you can see from the survey chart below, IBM ProtecTier, NetApp and HP are all ranked #1.

The details, as per the technical requirements of the customers, are shown below:

These figures show that the competition between the vendors is very, very stiff, with little to separate one from another. But what I was more interested in were the following findings, because these figures tell a story.

In the survey, only 34% of the respondents say they have implemented a data deduplication solution, while the rest are evaluating or plan to evaluate one. This means that the overall market is not saturated and there is still a window of opportunity for the vendors. However, the speed at which the data deduplication market has matured, from early adopters perhaps 4-5 years ago to overall market adoption, surprised many, because the storage industry tends to be a bit less trendy than most areas of IT. At the rate data deduplication adoption is going, it will very much be a standard feature from all storage vendors in the very near future.

The second figure, which is probably not so surprising, is that among the customers who have already implemented a data deduplication solution, almost 99% are satisfied or somewhat satisfied with it. Therefore, the likelihood of these customers switching vendors and replacing their gear is very low, perhaps partly because of the reliability of the solutions and partly because the products perform as they should.

The Information Week’s IT Pro survey probably reflects well where the deduplication market is going: there isn’t much difference in technical features from vendor to vendor. Customers will have to look beyond the usual technology pitch and weigh other (and perhaps more important) subtleties such as customer service, price and how flexible the vendor is to do business with. EMC/Data Domain, being king of the hill, has not been the best of vendors when it comes to price, quality of post-sales support and service innovation. Let’s hope they are not like the EMC sales folks of the past, carrying the “Take it or leave it” tag when they develop relationships with their future customers. And it will not help if word of mouth goes around the industry about EMC being arrogant in its dominance. It may not be true, and let’s hope it is not true, because the EMC of today has changed plenty compared to the Symmetrix days. EMC/Data Domain is now part of their Backup Recovery Systems (BRS) team, and I have good friends there at EMC Malaysia and Singapore. They are good guys but remember, guys, the customer is still king!

Dell, fresh from its acquisitions of Compellent and Ocarina Networks, seems very eager to win business, and kudos to them as well. In fact, I heard from a little birdie that Dell is “giving away” several Compellent units to selected customers in Malaysia. I have not been able to verify whether this is true, but if it is, that’s what I call thinking out of the box, given that Dell is a latecomer to the storage game. Well done!

One thing to note is that the survey took in 17 vendors, including ExaGrid, FalconStor, Quantum, Sepaton and so on, but only the top 7 shown in the charts qualified.

In the end, I believe the deduplication vendors had better scramble to grab as much business as they can in the coming months, because this market will be going, going, gone pretty soon, with nothing much left to grab after that, unless there is a disruptive innovation in deduplication technology.