I promised last week that I would look deeper into HP StoreOnce technology, and I did. As I mentioned in my previous blog, HP StoreOnce technology is now embedded in HP's D2D series of secondary, target backup devices, which do the job with no fuss and no fancy bells and whistles.

Here’s the lineup of the present HP D2D solutions.

HP Malaysia has constantly reminded me that their D2D deduplication solution is much more price competitive than their competitors', and this is something you, the readers, have to find out on your own. But I do believe that it is. Unfortunately, HP did not have the first mover’s advantage when Data Domain took the industry by storm in 2009, since HP StoreOnce was only launched, with much fanfare, last year in June 2010. Despite that, there is still plenty of room in the IT market to grow, especially among HP’s huge set of customers.

Without the first mover’s advantage, HP StoreOnce has to differentiate itself from existing competitors such as EMC Data Domain and Quantum. Labeling its deduplication technology as version 2.0 (whereas the competitors are still at “Version 1.0”?), HP StoreOnce banks on 3 key technologies. They are:

- Sparse Indexing

- Intelligent Block Size Management

- Reduction in Disk Fragmentation

Out of these 3, sparse indexing is the most interesting, but I will save the best for last. Let’s start with Intelligent Block Size Management.

HP StoreOnce uses a variable chunking method with a smaller granularity of 4K, and this is managed intelligently. HP says this achieves a higher deduplication ratio than its competitors, which use either a fixed chunking method or a variable chunking method with larger block sizes in the range of 8K to 32K. HP Labs’ testing reveals that the space savings were significant when compared with the others.
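To see why variable chunking matters, here is a minimal sketch of content-defined chunking compared against fixed 4K blocks. It is not HP's algorithm; the rolling hash, the window width, the minimum and maximum chunk sizes and all function names are my own assumptions for illustration. The idea is that a chunk boundary is decided by the data itself, so inserting a single byte at the front of a file does not shift every boundary after it.

```python
import hashlib
import random

# All constants below are illustrative assumptions, not StoreOnce parameters.
WINDOW = 48                        # rolling-window width in bytes
BASE, MOD = 257, (1 << 31) - 1     # polynomial rolling hash parameters
TOP = pow(BASE, WINDOW - 1, MOD)   # weight of the byte leaving the window
MASK = (1 << 12) - 1               # cut when the low 12 bits are zero (~every 4 KiB)
MIN_CHUNK, MAX_CHUNK = 1024, 32 * 1024


def variable_chunks(data: bytes) -> list:
    """Split data at boundaries chosen by a rolling hash of the last WINDOW bytes."""
    chunks, start, h = [], 0, 0
    for i, byte in enumerate(data):
        if i - start >= WINDOW:
            # Drop the oldest byte so the hash covers only the last WINDOW bytes.
            h = (h - data[i - WINDOW] * TOP) % MOD
        h = (h * BASE + byte) % MOD
        length = i - start + 1
        if (length >= MIN_CHUNK and (h & MASK) == 0) or length >= MAX_CHUNK:
            chunks.append(data[start:i + 1])
            start, h = i + 1, 0
    if start < len(data):
        chunks.append(data[start:])
    return chunks


def fixed_chunks(data: bytes, size: int = 4096) -> list:
    """Split data into fixed-size blocks."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def shared(a: list, b: list) -> int:
    """Count chunks of b whose SHA-1 already appears among the chunks of a."""
    seen = {hashlib.sha1(c).digest() for c in a}
    return sum(hashlib.sha1(c).digest() in seen for c in b)


rng = random.Random(0)
original = bytes(rng.getrandbits(8) for _ in range(200_000))
edited = b"!" + original           # one byte inserted at the very front

print("fixed chunks reused   :", shared(fixed_chunks(original), fixed_chunks(edited)))
print("variable chunks reused:", shared(variable_chunks(original), variable_chunks(edited)))
```

With fixed blocks, the one-byte insert shifts every block, so nothing from the first backup can be reused. With content-defined boundaries, the chunker falls back into step shortly after the insert and the chunks from there on hash to the same values as before.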

Below is a set of results for a PowerPoint presentation, and you can see for yourself.

(NOTE: The savings/deduplication ratio can vary widely, from good to bad, for different types of data. Video and image files are highly encoded. Seismic and geo-mapping files are highly compressed. It is very likely that most deduplication solutions cannot achieve a high ratio with these types of files.)

Point #2 is Reduction in Disk Fragmentation. The inherent benefits of Intelligent Block Size Management bring about the Reduction in Disk Fragmentation. Smaller chunks mean less wasted space, especially when the block size is 4K or lower. HP StoreOnce also uses an intelligent algorithm to place blocks that are perceived to be related close to one another. This “locality” helps make the retrieval and restore process faster and more efficient.

Sparse Indexing is where HP StoreOnce claims to be a game changer. Today’s data is already as massive as a mountain, and it is only going to get bigger and grow faster. With “Version 1.0” deduplication, the hashes created are stored either in memory or on disk. Hashes are used to identify unique data blocks, but the avalanche of data (especially unstructured data) means that most deduplication solutions are generating more and more of them. The hash index eventually outgrows memory, and lookups in most “Version 1.0” solutions become sluggish.
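A bit of back-of-envelope arithmetic shows how quickly a full hash index grows. The sketch below assumes a 20-byte hash per chunk (the size of a SHA-1 digest) and 4K chunks; the 100 TiB of stored data is a hypothetical figure of mine, and the calculation ignores any per-entry overhead such as disk location pointers, so a real index would be larger.

```python
HASH_BYTES = 20  # a SHA-1 digest is 160 bits, i.e. 20 bytes


def full_index_bytes(stored_bytes: int, chunk_size: int, hash_bytes: int = HASH_BYTES) -> int:
    """Size of an index that keeps one hash for every stored chunk."""
    chunks = stored_bytes // chunk_size
    return chunks * hash_bytes


stored = 100 * 2**40          # hypothetical: 100 TiB of unique data
index = full_index_bytes(stored, 4096)
print(f"{index / 2**30:.0f} GiB of hashes for {stored / 2**40:.0f} TiB at 4 KiB chunks")
```

That works out to hundreds of gigabytes of hashes alone, which is why keeping the whole index in RAM stops being practical as the data grows.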

Sparse Indexing addresses this hash problem (by the way, HP StoreOnce uses the SHA-1 hash) by intelligently sampling a small number of chunks and creating a very fast index lookup mechanism that stays in the system’s memory all the time. As the engineers at HP Labs put it:

Instead of holding every index item in RAM ready for comparison, the HP team keeps just one in every hundred or so items in RAM and puts the rest onto a hard drive. Duplicate data almost always arrives in bursts. In other words, if one chunk of the arriving stream is a duplicate, it is very likely that many following chunks are duplicates. Sparse indexing takes advantage of this phenomenon by storing the sequence of hashes of the stored chunks next to each other on disk. As a result, a ‘hit’ in the sample RAM index can direct the system to an area of the disk where many duplicates are likely to be found.
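The description above can be sketched in a few lines. This is only my toy reading of the idea and not HP's implementation: the sampling rule, the `SparseIndex` class and the in-memory list standing in for on-disk segments are all assumptions made for illustration.

```python
import hashlib

SAMPLE_RATE = 100  # keep roughly 1 in 100 hashes in RAM (assumed figure)


def is_sampled(digest: bytes) -> bool:
    """Deterministically pick about 1 in SAMPLE_RATE hashes for the RAM index."""
    return int.from_bytes(digest[:4], "big") % SAMPLE_RATE == 0


class SparseIndex:
    def __init__(self):
        self.ram_index = {}   # sampled hash -> segment id (small, stays in RAM)
        self.segments = []    # stand-in for on-disk runs of consecutive hashes
        self.disk_reads = 0   # how many segments had to be fetched

    def store_segment(self, digests):
        """Write a run of consecutive chunk hashes together and index the samples."""
        seg_id = len(self.segments)
        self.segments.append(list(digests))
        for d in digests:
            if is_sampled(d):
                self.ram_index[d] = seg_id
        return seg_id

    def find_duplicates(self, incoming):
        """Return one True/False per incoming hash: was it found in a candidate segment?"""
        candidates = {self.ram_index[d] for d in incoming if d in self.ram_index}
        known = set()
        for seg_id in candidates:
            self.disk_reads += 1          # one sequential read per candidate segment
            known.update(self.segments[seg_id])
        return [d in known for d in incoming]


# First backup: 1,000 chunks stored as one segment.
first = [hashlib.sha1(str(i).encode()).digest() for i in range(1000)]
index = SparseIndex()
index.store_segment(first)

# Second backup: the same chunks arrive again as one burst.
hits = index.find_duplicates(first)
print(len(index.ram_index), "hashes held in RAM,", sum(hits), "duplicates found,",
      index.disk_reads, "segment read")
```

A single hit on a sampled hash pulls in the whole neighbouring run of hashes with one read, which is exactly the "duplicates arrive in bursts" bet. The trade-off is that a burst containing no sampled hash at all goes undetected, so the scheme gives up a little deduplication in exchange for a much smaller RAM footprint.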

Sparse Indexing is not unique in the industry, but the engineers at HP Labs have put their thinking hats on and applied it to speed up the searching and lookup of hashes in the StoreOnce deduplication technology.

Further savings are also achieved when the deduped data is compressed with the LZ (Lempel-Ziv) compression method before it is written to disk.
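The order of operations (dedupe first, then compress only the unique chunks) can be sketched as below. I am using Python's `zlib` purely as a stand-in, since its DEFLATE format is built on LZ77; I do not know which LZ variant StoreOnce uses, and the function names and the dictionary acting as the chunk store are assumptions for illustration.

```python
import hashlib
import zlib


def dedupe_and_compress(chunks):
    """Store each unique chunk once, compressed; return the store and a restore recipe."""
    store = {}    # SHA-1 digest -> compressed chunk (stand-in for the disk store)
    recipe = []   # ordered list of digests needed to rebuild the stream
    for chunk in chunks:
        digest = hashlib.sha1(chunk).digest()
        if digest not in store:
            store[digest] = zlib.compress(chunk)   # only unique data gets compressed
        recipe.append(digest)
    return store, recipe


def restore(store, recipe):
    """Rebuild the original stream by decompressing chunks in recipe order."""
    return b"".join(zlib.decompress(store[d]) for d in recipe)


chunks = [b"A" * 4096, b"B" * 4096, b"A" * 4096]   # third chunk duplicates the first
store, recipe = dedupe_and_compress(chunks)

raw = sum(len(c) for c in chunks)
kept = sum(len(c) for c in store.values())
print(f"{len(chunks)} chunks in, {len(store)} stored, {raw} bytes reduced to {kept}")
assert restore(store, recipe) == b"".join(chunks)
```

Deduplication removes the repeated chunk and compression then shrinks what is left, so the two savings multiply rather than overlap.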

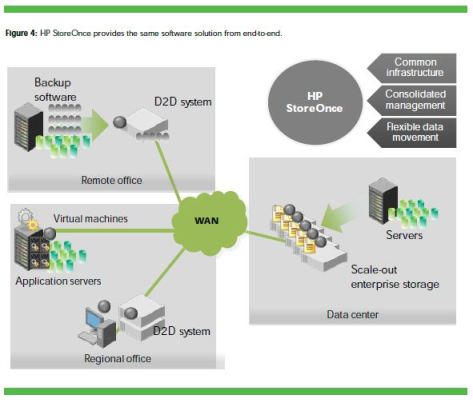

The HP StoreOnce technology was developed entirely in the renowned HP Labs and, according to sources, it will indeed permeate across the whole HP StorageWorks line (HP has since renamed it HP Storage). With this strategy, HP hopes to address the “fragmented and complicated” (as quoted by HP) deduplication and data protection strategy across the enterprise. By “fragmented and complicated”, they mean that deduplicated data constantly has to be rehydrated and deduped again as it moves across different IT devices and functions.

In a perfect world, HP wants their StoreOnce technology to be like the diagram below.

However, one very interesting fact I found was that HP does not believe primary storage deduplication is a good idea. They claim that it complicates the whole thing. Whether HP likes it or not, NetApp has been dishing out primary storage deduplication for several years now, and you don’t see their customers unhappy with NetApp about this feature.

In one of the HP Business whitepapers I read, one of the takeaways was

I was like, “Whoa! What’s this?” I was bemused by what was mentioned in the whitepaper. After all the best claims of the HP StoreOnce technology, I can’t help but think that this could be a banana skin on the pavement for HP.