Does your IT department have a bottomless budget? If not, storage tiering is likely to be one of IT’s weapons for combating the ever-growing need for storage capacity.

Storage tiering is not new. In the past, features such as HSM (Hierarchical Storage Management) and ILM (Information Lifecycle Management) addressed storage tiering in different capacities, ranging from simple movement and migration of aging files to data objects being moved within an organization’s data infrastructure with some kind of workflow and search capability.

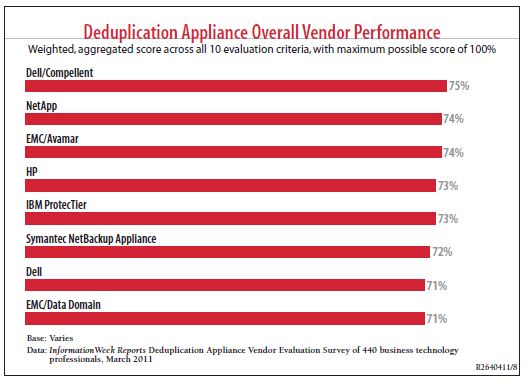

Lately, storage tiering, and especially automated storage tiering, has been gaining prominence, thanks to 2 high-profile acquisitions: HP 3PAR and Dell Compellent. According to Wikibon,

“Tiered storage is a system of assigning applications to different types of storage media based on application requirements. Factors considered in the allocation of storage type include the level of protection needed, performance requirements, speed of recovery, and many other considerations. Since assigning application data to specific media may be complex, some vendors provide software for automatically managing the process.”

For the sake of simplicity, this blog talks about automated storage tiering within the storage array itself, where data blocks are moved between several tiers to achieve just-right storage provisioning. Why do we need this “just-right provisioning”? Rather than discussing it from a technical IT angle, just-right storage provisioning should be addressed from a business and operational angle and, more to the point, in terms of costs and benefits.

Business and operations are about managing costs and increasing profits. In the past, many storage administrators employed a single storage tier architecture. Using the same type of disks, for example 146GB 10,000 RPM Fibre Channel disks, there were usually 1 or 2 RAID levels for the entire data storage requirement. RAID 1+0 volumes/LUNs were usually reserved for the applications that required the highest performance and availability, but they came at a big cost because half of the raw capacity goes to mirroring. So, the rest of the data was kept in RAID-5 volumes/LUNs. The introduction of enterprise SATA hard disk drives changed the rules of the ball game, giving storage administrators another, cheaper option for storing their data. Storage vendors saw the need to address this requirement, and hence created automated storage tiering as part of their offerings.
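To put a rough number on that "big cost", here is a minimal sketch of the usable-capacity arithmetic for the two RAID levels mentioned above. The function name and the eight-disk shelf are my own assumptions for illustration, and the calculation ignores hot spares and formatting overhead.

```python
def usable_capacity_gb(disk_count: int, disk_size_gb: float, raid_level: str) -> float:
    """Usable capacity of a single RAID group (no spares, no formatting overhead)."""
    if raid_level == "1+0":
        # Every disk is mirrored, so only half of the raw capacity is usable.
        if disk_count < 2 or disk_count % 2:
            raise ValueError("RAID 1+0 needs an even number of disks (at least 2)")
        return disk_count / 2 * disk_size_gb
    if raid_level == "5":
        # One disk's worth of capacity in the group is consumed by parity.
        if disk_count < 3:
            raise ValueError("RAID 5 needs at least 3 disks")
        return (disk_count - 1) * disk_size_gb
    raise ValueError(f"unsupported RAID level: {raid_level}")


# Hypothetical shelf of eight 146GB disks (1168GB raw).
raid10_gb = usable_capacity_gb(8, 146, "1+0")  # 584.0
raid5_gb = usable_capacity_gb(8, 146, "5")     # 1022
print(f"RAID 1+0: {raid10_gb:.0f}GB usable, RAID-5: {raid5_gb:.0f}GB usable")
```

On the same eight disks, RAID-5 yields 75% more usable capacity than RAID 1+0, which is why only the most demanding applications were given RAID 1+0.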

There are quite a few storage solutions that offer storage tiering, and most of them are automated as well, meaning that the data blocks are moved automatically between the different tiers of storage within the array itself. 3PAR, long before they were acquired by HP, had their Dynamic Autonomic Tiering. Today, with HP, 3PAR offers 2 key strengths in their Autonomic Tiering offering:

- Adaptive Optimization

- Dynamic Optimization

As HP puts it,

Not to be outdone, Compellent (also long before its acquisition by Dell) had the Data Progression feature as part of its Automated Storage Tiering offering. In a nutshell, their solution (which, from a 10,000-foot view, is basically similar to most of the competitors) is shown below.

The idea is to put the most frequently accessed data blocks on the fastest, most expensive storage tier and then dynamically move the less frequently accessed data blocks to the slowest, most economical tier.
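As a rough illustration of that idea (and not any vendor's actual algorithm), the sketch below ranks blocks by access count and fills the fastest tier first. The function name, the block names and the tier capacities are all hypothetical.

```python
def place_by_heat(access_counts: dict[str, int], tier_capacities: list[int]) -> dict[str, int]:
    """Assign each block to a tier (1 = fastest), hottest blocks first.

    tier_capacities[i] is how many blocks tier i+1 can hold.
    """
    if len(access_counts) > sum(tier_capacities):
        raise ValueError("not enough total capacity for all blocks")

    # Hottest first; ties are broken by block name so the result is deterministic.
    ranked = sorted(access_counts, key=lambda block: (-access_counts[block], block))

    free = list(tier_capacities)
    placement = {}
    tier = 0
    for block in ranked:
        while free[tier] == 0:  # current tier is full, spill to the next one
            tier += 1
        placement[block] = tier + 1
        free[tier] -= 1
    return placement


# Hypothetical access counts over some sampling window.
counts = {"a": 900, "b": 15, "c": 300, "d": 2, "e": 40}
print(place_by_heat(counts, tier_capacities=[1, 2, 4]))
# {'a': 1, 'c': 2, 'e': 2, 'b': 3, 'd': 3}
```

A real array would work incrementally and weigh the cost of moving data, but the ranking captures the basic trade: scarce fast capacity goes to whatever is accessed most.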

I had the privilege of learning more about Compellent technology (before Dell) about 2.5 years ago, thanks to my friends Chyr and Winston, the bosses at Impact Business Solutions. What Compellent had was pretty cool stuff, and I would like to share what I have picked up about the Dell Compellent storage solution, though some of the information could be a little out of date.

The foundation of Dell Compellent’s automated storage tiering feature, called Data Progression, is their Dynamic Block Architecture (as shown below).

From a high level, all data blocks are bunched together into a logical data structure called a page. A page is 2MB by default but can be configured between 512KB and 4MB. The page is the granular unit on which the Data Progression feature in Compellent’s automated storage tiering solution operates. Every page comes with attached metadata about the page, such as:

- When was this page created

- When was this page last accessed

- Which RAID level is it currently in (RAID 1+0, RAID 5-9, RAID 5-5 and so on)

- Which Tier does it currently reside in (Tier 1, 2 or 3)

- Which kind of disk track does it live in (Fast or Standard)
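The per-page metadata listed above can be pictured as a small record. This is only a sketch of the concept: the class and field names are my own, not Compellent's internal format.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class PageMetadata:
    """Hypothetical per-page record mirroring the metadata listed above."""
    created_at: datetime
    last_accessed_at: datetime
    raid_level: str      # e.g. "RAID 1+0"
    tier: int            # 1, 2 or 3
    track: str           # "fast" (outer tracks) or "standard" (inner tracks)
    size_kb: int = 2048  # 2MB default, configurable between 512KB and 4MB

    def __post_init__(self) -> None:
        if not 512 <= self.size_kb <= 4096:
            raise ValueError("page size must be between 512KB and 4MB")
        if self.tier not in (1, 2, 3):
            raise ValueError("tier must be 1, 2 or 3")
        if self.track not in ("fast", "standard"):
            raise ValueError("track must be 'fast' or 'standard'")

    def idle_days(self, now: datetime) -> int:
        """Whole days since the page was last accessed."""
        return (now - self.last_accessed_at).days


page = PageMetadata(
    created_at=datetime(2010, 6, 1),
    last_accessed_at=datetime(2011, 1, 1),
    raid_level="RAID 1+0",
    tier=1,
    track="fast",
)
print(page.idle_days(datetime(2011, 1, 31)))  # 30
```

The timestamps matter most here: together with the access statistics, they tell the array how stale a page is and therefore where it belongs.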

Meanwhile, there are different storage Tiers, notably Tier 1, 2 and 3, where different disk profiles reside. Typically, the SSDs or the 15K RPM disk drives will be in Tier 1, the 10K RPM disk drives will be in Tier 2 and the slowest 7200 RPM disks will be in Tier 3. Each of the 3 tiers is further divided into the outer Fast disk tracks (where the platter surface passes under the head fastest, giving higher throughput) and the Standard disk tracks (the inner, slower tracks).

As data chunks or blocks are accessed, their frequency of access and their data movement statistics are gathered in real time, giving the Compellent solution fairly good intelligence about how the pages should be laid out on the most relevant tiers. As pages become stale and less relevant, they are progressively relegated to the lower tiers, while the most active and most relevant pages are progressively promoted to the higher tiers.

Different policies can also be configured to ensure that some important pages stay where they are regardless of their frequency of access or their relevance.
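To make the promote, relegate and "stay put" behaviour concrete, here is a toy progression pass. It is a sketch under my own assumptions: the `hot_days` and `cold_days` thresholds, the `pinned` flag and the one-tier-per-pass rule are illustrative, not how Data Progression is actually tuned.

```python
from dataclasses import dataclass


@dataclass
class Page:
    name: str
    tier: int            # 1 = fastest, 3 = slowest
    idle_days: int       # days since last access
    pinned: bool = False # policy override: never move this page


def progress(pages: list[Page], hot_days: int = 3, cold_days: int = 30,
             lowest_tier: int = 3) -> list[tuple[str, str]]:
    """Run one progression pass, moving each page by at most one tier."""
    moves = []
    for page in pages:
        if page.pinned:
            continue  # policy says this page stays where it is
        if page.idle_days <= hot_days and page.tier > 1:
            page.tier -= 1
            moves.append((page.name, "promoted"))
        elif page.idle_days >= cold_days and page.tier < lowest_tier:
            page.tier += 1
            moves.append((page.name, "demoted"))
    return moves


pages = [
    Page("db-index", tier=2, idle_days=1),
    Page("old-logs", tier=1, idle_days=45),
    Page("payroll", tier=1, idle_days=90, pinned=True),
    Page("scratch", tier=3, idle_days=60),
]
print(progress(pages))
# [('db-index', 'promoted'), ('old-logs', 'demoted')]
```

Note that the pinned page stays in Tier 1 despite being the stalest, and the page already in Tier 3 has nowhere lower to go.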

There is a very nice whitepaper from Dell detailing their Data Progression technology.

Another big automated storage tiering player is HP 3PAR. I admit that I don’t know the inner details of the HP 3PAR Dynamic Tiering solution, though I had some high-level lessons from a 3PAR Systems Engineer called Nathan Boeger (thanks to my friends at PTC Singapore, the 3PAR distributor back then) at about the same time I learned about Dell Compellent. I hope HP can offer a more in-depth introduction to how the 3PAR technology works, now that I have gotten cosy with some of HP Malaysia’s folks.

Similarly, the other big boys offer automated storage tiering solutions as well. IBM has been offering Easy Tier for almost 18 months and EMC has had its FAST2 for about the same time.

Funnily, the odd one out in this automated storage tiering game is NetApp. I was in a partner conference call about 1 year ago and there were questions asking NetApp for their views on automated storage tiering. At the time of that call, NetApp did not believe in automated storage tiering, preferring to market their FlashCache PCIe (previously called the PAM card) solution. Take note that FlashCache is a Read-Only “extension” to their NVRAM, used to accelerate read operations of WAFL. Also take note that NetApp, at the time of writing, does not have an “engine” that performs automated storage tiering, regardless of how they spin it.

There are also host-based file tiering solutions. Since I am familiar with the NetApp universe, Arkivio and Enigma Data Solutions are 2 of the main partners that NetApp works with. Recently NetApp also started reselling StorNext from Quantum. But note that these host-based solutions are file-based, making them less granular, less dynamic and less efficient. They are usually marketed as file archiving solutions, and the host-based licenses are usually charged per TB. In large enterprises, this might make sense, but for the everyday Joes (with tight IT budgets), host-based file archiving solutions are expensive. And they are nowhere close to the efficiencies of automated storage tiering.

Overall, automated storage tiering, when applied, should help IT operations and the organization’s business reduce costs. There is no longer a one-size-fits-all model. Matching the right storage tier to the relevance and importance of the data at a very granular sub-LUN/sub-volume level will help any organization take a more prudent approach to actively managing its data and, more importantly, its cost of operations.

This is called Responsible IT. 😀