I am a bit surprised that primary storage deduplication has not taken off in a big way, unlike the times when the buzz of deduplication first came into being about 4 years ago.

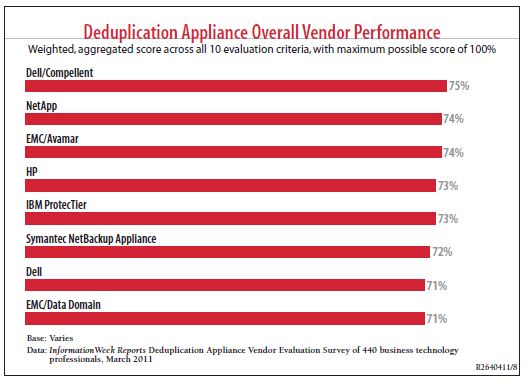

When the first deduplication solutions first came out, it was particularly aimed at the backup data space. It is now more popularly known as secondary data deduplication, the technology has reduced the inefficiencies of backup and helped sparked the frenzy of adulation of companies like Data Domain, Exagrid, Sepaton and Quantum a few years ago. The software vendors were not left out either. Symantec, Commvault, and everyone else in town had data deduplication for backup and archiving.

It was no surprise that EMC battled NetApp and finally won the rights to acquire Data Domain for USD$2.4 billion in 2009. Today, in my opinion, the landscape of secondary data deduplication has pretty much settled and matured. Practically everyone has some sort of secondary data deduplication technology or solution in place.

But then the talk of primary data deduplication hardly cause a ripple when compared a few years ago, especially here in Malaysia. Yeah, the IT crowd is pretty fickle that way because most tend to follow the trend of the moment. Last year was Cloud Computing and now the big buzz word is Big Data.

We are here to look at technologies to solve problems, folks, and primary data deduplication technology solutions should be considered in any IT planning. And it is our job as storage networking professionals to continue to advise customers about what is relevant to their business and addressing their pain points.

I get a bit cheesed off that companies like EMC, or HDS continue to spend their marketing dollars on hyping the trends of the moment rather than using some of their funds to promote good technologies such as primary data deduplication that solve real life problems. The same goes for most IT magazines, publications and other communications mediums, rarely giving space to technologies that solves problems on the ground, and just harping on hypes, fuzz and buzz. It gets a bit too ordinary (and mundane) when they are trying too hard to be extraordinary because everyone is basically talking about the same freaking thing at the same time, over and over again. (Hmmm … I think I am speaking off topic now .. I better shut up!)

We are facing an avalanche of data. The other day, the CEO of Nexenta used the word “data tsunami” but whatever terms used do not matter. There is too much data. Secondary data deduplication solved one part of the problem and now it’s time to talk about the other part, which is data in primary storage, hence primary data deduplication.

What is out there? Who’s doing what in term of primary data deduplication?

NetApp has their A-SIS (now NetApp Dedupe) for years and they are good in my books. They talk to customers about the benefits of deduplication on their FAS filers. (Side note: I am seeing more benefits of using data compression in primary storage but I am not going to there in this entry). EMC has primary data deduplication in their Celerra years ago but they hardly talk much about it. It’s on their VNX as well but again, nobody in EMC ever speak about their primary deduplication feature.

I have always loved Ocarina Networks ECO technology and Dell don’t give much hoot about Ocarina since the acquisition in 2010. The technology surfaced a few months ago in Dell DX6000G Storage Compression Node for its Object Storage Platform, but then again, all Dell talks about is their Fluid Data Architecture from the Compellent division. Hey Dell, you guys are so one-dimensional! Ocarina is a wonderful gem in their jewel case, and yet all their storage guys talk about are Compellent and EqualLogic.

Moving on … I ought to knock Oracle on the head too. ZFS has great data deduplication technology that is meant for primary data and a couple of years back, Greenbytes took that and made a solution out of it. I don’t follow what Greenbytes is doing nowadays but I do hope that the big wave of primary data deduplication will rise for companies such as Greenbytes to take off in a big way. No thanks to Oracle for ignoring another gem in ZFS and wasting their resources on pre-sales (in Malaysia) and partners (in Malaysia) that hardly know much about the immense power of ZFS.

But an unexpected source coming from Microsoft could help trigger greater interest in primary data deduplication. I have just read that the next version of Windows Server OS will have primary data deduplication integrated into NTFS. The feature will be available in Windows 8 and the architectural view is shown below:

The primary data deduplication in NTFS will be a feature add-on for Windows Server users. It is implemented as a filter driver on a per volume basis, with each volume a complete, self describing unit. It is cluster aware, and fully crash consistent on all operations.

The technology is Microsoft’s own technology, built from scratch and will be working to position Hyper-V as an strong enterprise choice in its battle for the server virtualization space with VMware. Mind you, VMware already has a big, big lead and this is just something that Microsoft must do-or-die to keep Hyper-V playing catch-up. Otherwise, the gap between Microsoft and VMware in the server virtualization space will be even greater.

I don’t have the full details of this but I read that the NTFS primary deduplication chunk sizes will be between 32KB to 128KB and it will be post-processing.

With Microsoft introducing their technology soon, I hope primary data deduplication will get some deserving accolades because I think most companies are really not doing justice to the great technologies that they have in their jewel cases. And I hope Microsoft, with all its marketing savviness and adeptness, will do some justice to a technology that solves real life’s data problems.

I bid you good luck – Primary Data Deduplication! You deserved better.